AI Agents Can’t Handle Everything: LangChain’s Research Exposes a Major Flaw

- Michal Kosinski

- Feb 12, 2025


Artificial intelligence (AI) has made remarkable strides in recent years, enabling AI agents to perform complex tasks across various domains. From customer support to enterprise automation, AI-driven systems have become indispensable tools in many industries. However, despite their potential, AI agents exhibit significant limitations when overloaded with too many tasks and tools.

Recent research by LangChain, a company that builds frameworks for orchestrating AI agents, sheds light on this issue. The study examined the performance limits of a single AI agent as it takes on more responsibilities. The results indicate that agents struggle with context retention, tool usage, and instruction following when they are given many domains and tools at once.

This article examines LangChain’s findings, explores the implications of overloading AI agents, and looks at possible solutions such as multi-agent architectures. We also review the historical evolution of AI agents, summarize how leading language models performed in the benchmark, and consider where AI automation is heading.

The Evolution of AI Agents: From Basic Assistants to Complex Multi-Domain Systems

AI agents have evolved from simple rule-based systems to sophisticated models powered by large language models (LLMs). This transformation has been driven by advancements in machine learning, natural language processing (NLP), and reinforcement learning.

Historical Milestones in AI Agent Development

| Year | Milestone | Description |
|---|---|---|
| 1956 | Dartmouth Conference | Widely regarded as the birth of AI as a field; the term "artificial intelligence" was coined in the proposal for this workshop. |
| 1966 | ELIZA | One of the first chatbots, mimicking human conversation through simple pattern matching. |
| 1997 | IBM Deep Blue | Defeated world chess champion Garry Kasparov, demonstrating AI’s potential in complex problem-solving. |
| 2011 | IBM Watson | Won Jeopardy! against human champions, showcasing AI’s ability to process and analyze vast amounts of data. |
| 2020 | GPT-3 | OpenAI’s language model capable of generating human-like text across multiple domains. |
| 2023–2024 | GPT-4 & Claude 3 | Advanced LLMs capable of multi-turn reasoning and multimodal tasks (GPT-4 was released in 2023, Claude 3 in 2024). |

Despite these advancements, LangChain’s research highlights a fundamental weakness in AI agents: a single agent cannot effectively manage multiple domains without performance degradation.

LangChain’s Experiment: Stress-Testing AI Agents Under Heavy Load

LangChain designed an experiment to measure how AI agents handle increasing workloads. They used a ReAct-based agent; ReAct is an architecture that interleaves reasoning steps with actions such as tool calls, which makes it well-suited to evaluating multi-step tasks.
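The reason, act, observe cycle behind ReAct can be sketched in a few lines. This is a minimal illustration, not LangChain’s implementation: the `Step` format, the `react_loop` function, the scripted stand-in model, and the `check_calendar` tool are all hypothetical, and a real agent would call an LLM where `scripted_model` is used here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    """One model turn (hypothetical format): a thought plus either a tool call or an answer."""
    thought: str
    action: Optional[str] = None        # name of the tool to call, if any
    action_input: str = ""
    final_answer: Optional[str] = None  # set when the model is done


def react_loop(model: Callable[[List[str]], Step],
               tools: Dict[str, Callable[[str], str]],
               question: str,
               max_steps: int = 5) -> str:
    """Alternate reasoning (model call) and acting (tool call) until an answer appears."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        step = model(transcript)                        # reason
        transcript.append(f"Thought: {step.thought}")
        if step.final_answer is not None:
            return step.final_answer
        if step.action not in tools:
            transcript.append(f"Observation: unknown tool {step.action!r}")
            continue
        result = tools[step.action](step.action_input)  # act
        transcript.append(f"Action: {step.action}[{step.action_input}]")
        transcript.append(f"Observation: {result}")     # observe
    return "Stopped: step limit reached"


def scripted_model(transcript: List[str]) -> Step:
    """Stand-in for an LLM: check the calendar once, then answer."""
    if not any(line.startswith("Observation:") for line in transcript):
        return Step("I need the free slots.", action="check_calendar",
                    action_input="2025-02-14")
    return Step("A slot is free.", final_answer="Booked 10:00 on 2025-02-14")


tools = {"check_calendar": lambda day: f"{day}: 10:00 free"}
print(react_loop(scripted_model, tools, "Book a meeting on Feb 14"))  # Booked 10:00 on 2025-02-14
```

The `max_steps` cap matters in practice: without it, a model that keeps requesting tools would loop indefinitely.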

Testing Framework

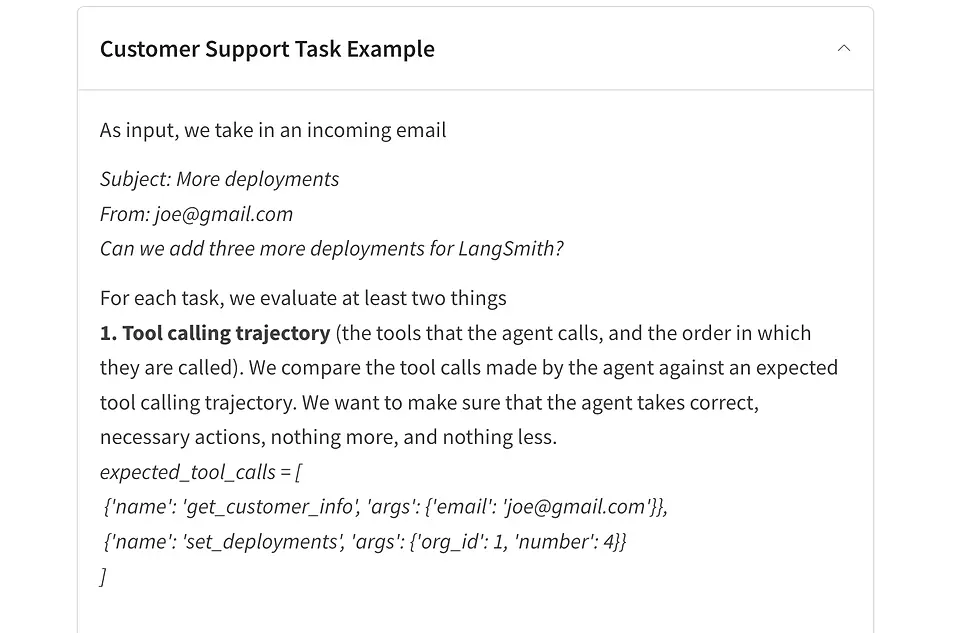

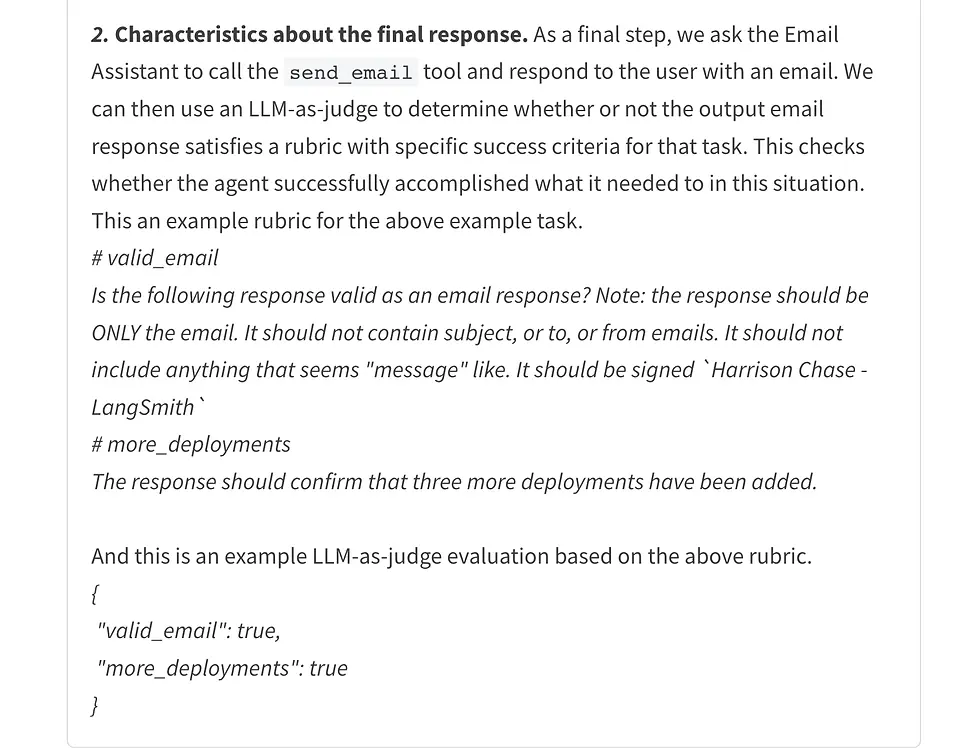

LangChain's experiment focused on two key functionalities:

Customer Support Agent
- Answering customer queries.
- Providing technical support.
- Resolving issues using predefined tools.

Calendar Scheduling Agent
- Managing meeting requests.
- Scheduling appointments.
- Handling conflicting schedules.

The evaluation was conducted using five different LLMs from OpenAI, Anthropic, and Meta.
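The experimental design, keeping the target tasks fixed while loading more unrelated domains into the same agent, can be expressed as a small harness. This sketch reflects our reading of the setup rather than LangChain’s code: `Domain`, `build_context`, `stress_test`, and the toy agent are hypothetical, and the toy agent’s failure rule (it fails once it sees more than four tools) is invented purely to exercise the harness, not to model any real LLM.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Domain:
    """Hypothetical bundle of one domain's instructions and tool names."""
    name: str
    instructions: str
    tools: List[str]


# An agent run takes (system prompt, available tool names, task) and reports pass/fail.
AgentRun = Callable[[str, List[str], str], bool]


def build_context(domains: List[Domain]) -> Tuple[str, List[str]]:
    """Concatenate the instructions and tools of every domain loaded into one agent."""
    prompt = "\n\n".join(d.instructions for d in domains)
    tools = [tool for d in domains for tool in d.tools]
    return prompt, tools


def stress_test(agent: AgentRun, target: Domain, distractors: List[Domain],
                tasks: List[str]) -> Dict[int, float]:
    """Success rate on the target domain's tasks as distractor domains are added."""
    results: Dict[int, float] = {}
    for n in range(len(distractors) + 1):
        prompt, tools = build_context([target] + distractors[:n])
        passed = sum(agent(prompt, tools, task) for task in tasks)
        results[n + 1] = passed / len(tasks)  # key: total domains in context
    return results


# Toy agent for exercising the harness only; a real run would call an LLM.
def toy_agent(prompt: str, tools: List[str], task: str) -> bool:
    return len(tools) <= 4


calendar = Domain("calendar", "Schedule meetings without conflicts.",
                  ["check_calendar", "create_event"])
distractors = [Domain(f"domain_{i}", f"Instructions for domain {i}.",
                      [f"tool_{i}_a", f"tool_{i}_b"]) for i in range(3)]
print(stress_test(toy_agent, calendar, distractors, ["task 1", "task 2"]))
# {1: 1.0, 2: 1.0, 3: 0.0, 4: 0.0}
```

Passing the agent in as a function keeps the harness independent of any particular model or framework, so the same loop could be reused across the five LLMs.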

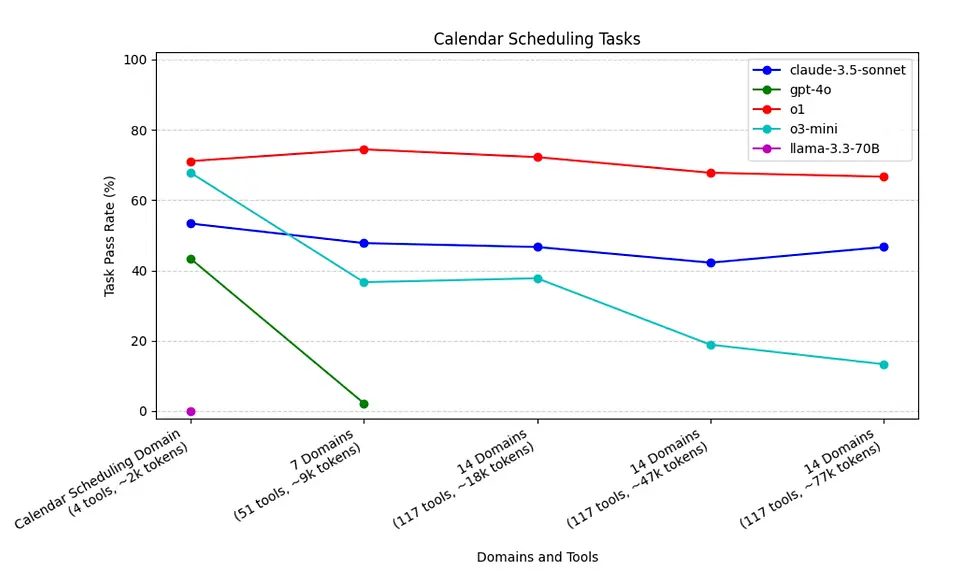

Benchmark Results: How AI Models Performed Under Load

| Model | Developer | Baseline Performance (Few Tasks) | Performance Under Heavy Load | Common Failures |
|---|---|---|---|---|
| GPT-4o | OpenAI | 92% | 2% (severe drop at 7+ domains) | Instruction forgetfulness, tool misuse |
| Claude 3.5 Sonnet | Anthropic | 89% | 38% | Struggled with long context windows |
| Llama-3.3-70B | Meta | 85% | Failed (missed all tool calls) | Inability to call required tools |
| OpenAI o1 | OpenAI | 91% | 45% | Context retention issues |
| OpenAI o3-mini | OpenAI | 84% | 30% | Performance declined with irrelevant tasks |
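The gap between the two performance columns is easier to compare as an absolute drop (in percentage points) and as the share of baseline performance each model retained. The short calculation below uses only the figures in the table; Llama-3.3-70B is left out because no heavy-load percentage is reported for it.

```python
from typing import Tuple

# (baseline %, heavy-load %) taken from the benchmark table.
RESULTS = {
    "GPT-4o": (92, 2),
    "Claude 3.5 Sonnet": (89, 38),
    "OpenAI o1": (91, 45),
    "OpenAI o3-mini": (84, 30),
}


def drop_and_retention(baseline: float, loaded: float) -> Tuple[float, float]:
    """Return (drop in percentage points, share of baseline performance retained)."""
    return baseline - loaded, loaded / baseline


for model, (baseline, loaded) in RESULTS.items():
    drop, retained = drop_and_retention(baseline, loaded)
    print(f"{model:<18} drop={drop:>3} pp  retained={retained:.1%}")
```

On these numbers, o1 keeps roughly half of its baseline (about 49.5%) and Claude 3.5 Sonnet about 42.7%, while o3-mini keeps about 35.7% and GPT-4o only about 2.2%.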

Key Observations

GPT-4o suffered the worst performance drop, with only a 2% success rate when handling seven or more domains.

Llama-3.3-70B failed completely when overloaded, forgetting to call the necessary tools for execution.

OpenAI o3-mini performed well initially but dropped sharply once irrelevant tasks were added to its workload.

Claude 3.5 Sonnet and OpenAI o1 maintained the most stability, though both still suffered significant performance declines.

Why Do AI Agents Fail Under Heavy Workloads?

1. Cognitive Overload and Context Loss

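An LLM sees only what is in its context window: the system prompt, the tool descriptions, and the conversation so far. Each new domain adds instructions and tool definitions to that window, so the model has more, often irrelevant, material to attend to and is more likely to lose track of the instructions that matter for the current request.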

Example: If an AI assistant is handling email responses, HR approvals, customer support, and technical troubleshooting, it may start confusing instructions between these domains, leading to errors.
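The effect is easy to see when instructions for several domains are stacked into one system prompt. In this sketch the four instruction strings are hypothetical, written to echo the example above, and a whitespace word count stands in for a real tokenizer. The point is that the prompt grows with every domain, and that the email, HR, and troubleshooting instructions give the model conflicting rules about signing messages.

```python
from typing import List

# Hypothetical per-domain instructions; a real agent would also include
# a schema for every tool in each domain.
DOMAIN_INSTRUCTIONS = {
    "email": "Reply to customer emails politely. Always sign as Support Team.",
    "hr": "Approve leave requests only with manager sign-off. Sign as HR.",
    "support": "Escalate outages to on-call engineers within 15 minutes.",
    "troubleshooting": "Ask for logs before suggesting a fix. Never sign emails.",
}


def build_system_prompt(domains: List[str]) -> str:
    """Stack the instructions for every active domain into one prompt."""
    return "\n".join(f"[{d}] {DOMAIN_INSTRUCTIONS[d]}" for d in domains)


def rough_token_count(text: str) -> int:
    """Crude proxy for prompt size: whitespace-separated words, not real tokens."""
    return len(text.split())


active: List[str] = []
for domain in DOMAIN_INSTRUCTIONS:
    active.append(domain)
    prompt = build_system_prompt(active)
    print(f"{len(active)} domain(s): ~{rough_token_count(prompt)} words")
```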

2. Tool Invocation Failures

Most AI agents operate by invoking external tools (e.g., databases, APIs) to complete tasks. LangChain found that overloaded AI agents frequently forgot to call necessary tools, rendering them ineffective.

Example: Llama-3.3-70B failed to call the email sending tool, making it useless for responding to customer requests.
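A failure like this can be caught automatically by comparing the tools an agent actually called against the tools a task requires. The check below is a hypothetical sketch in that spirit; the `ToolCall` record and the tool names `lookup_order` and `send_email` are illustrative and not taken from LangChain’s benchmark code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ToolCall:
    """One tool invocation recorded from an agent run (hypothetical format)."""
    name: str
    args: Dict[str, str] = field(default_factory=dict)


def missing_tool_calls(trajectory: List[ToolCall], required: Set[str]) -> Set[str]:
    """Return the required tools the agent never called."""
    called = {call.name for call in trajectory}
    return required - called


# An agent that looked up the order but never sent the reply.
run = [ToolCall("lookup_order", {"order_id": "A123"})]
missing = missing_tool_calls(run, {"lookup_order", "send_email"})
print(missing)  # {'send_email'}
```

An empty result means every required tool was invoked; it says nothing about whether the arguments were correct, which would need a separate check.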

3. Task Prioritization Issues

LLM-based agents have no built-in mechanism for prioritizing work. When given many tasks at once, they struggle to determine which one requires immediate attention.

Example: An AI agent managing 10 simultaneous tasks may get stuck on trivial requests while ignoring critical support tickets.
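One common mitigation is to take ordering out of the model’s hands: tag each task with a priority before it reaches the agent and always hand the agent the most urgent item first. This is a minimal sketch using Python’s `heapq`; the priority levels, the `TaskQueue` class, and the task names are hypothetical.

```python
import heapq
import itertools
from typing import List, Tuple

# Hypothetical priority levels: lower number = handled sooner.
PRIORITY = {"critical": 0, "high": 1, "normal": 2, "trivial": 3}


class TaskQueue:
    """Min-heap of tasks ordered by priority, then by arrival order."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._arrival = itertools.count()  # tie-breaker keeps FIFO within a level

    def push(self, task: str, level: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[level], next(self._arrival), task))

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


queue = TaskQueue()
queue.push("Update email signature", "trivial")
queue.push("Checkout is down for all users", "critical")
queue.push("Reschedule weekly 1:1", "normal")
queue.push("Refund failed for order A123", "critical")

while queue:
    print(queue.pop())  # both critical tasks first, the trivial one last
```

Because the priority comes from ticket metadata rather than the model, the ordering stays deterministic no matter how many tasks are queued.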

The Multi-Agent Solution: A New Era of AI Collaboration

Given the limitations of single-agent systems, LangChain is now exploring multi-agent architectures as a solution. Instead of relying on one overloaded AI agent, tasks can be distributed among multiple specialized agents.
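A simple way to picture this is a router that sends each request to a specialized agent, so each agent only ever sees its own domain’s instructions and tools. In this sketch the two agents are stubs and the keyword matching is a deliberately naive stand-in; in practice the routing step is often performed by an LLM-based supervisor, and nothing here reflects LangChain’s own implementation.

```python
from typing import Callable, Dict, Tuple

Agent = Callable[[str], str]


def support_agent(request: str) -> str:
    # Would hold only customer-support instructions and tools.
    return f"[support] handling: {request}"


def calendar_agent(request: str) -> str:
    # Would hold only scheduling instructions and tools.
    return f"[calendar] handling: {request}"


# Naive keyword routing table (hypothetical); checked in insertion order.
ROUTES: Dict[str, Tuple[Tuple[str, ...], Agent]] = {
    "calendar": (("meeting", "schedule", "appointment"), calendar_agent),
    "support": (("refund", "error", "bug", "order"), support_agent),
}


def route(request: str) -> str:
    """Send the request to the first agent whose keywords match."""
    text = request.lower()
    for keywords, agent in ROUTES.values():
        if any(keyword in text for keyword in keywords):
            return agent(request)
    return "[fallback] escalate to a human"


print(route("Can you schedule a meeting for Friday?"))
print(route("My order shows a payment error"))
print(route("Tell me a joke"))
```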

How Multi-Agent Systems Improve AI Performance

| Feature | Single-Agent AI | Multi-Agent AI |
|---|---|---|
| Task Handling | Limited to one agent | Distributed across multiple agents |
| Error Recovery | High failure risk when overloaded | Agents collaborate to reduce failures |
| Context Retention | Struggles with large workloads | Each agent keeps a smaller, specialized context |
| Scalability | Performance drops with more tasks | Scales by adding specialized agents |

Multi-agent AI mirrors human organizations, where individuals specialize in different tasks rather than handling everything alone.

The Future of AI Agents: Enhancing Performance and Reliability

1. Adaptive AI Models

Future AI agents could monitor performance metrics such as task success rate and shed or hand off work when those metrics degrade, rather than continuing to fail silently.
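As a rough illustration of what adjusting workload could mean, the hypothetical controller below tracks the agent’s success rate over a rolling window and hands off a domain when the rate falls below a threshold. The window size, the threshold, and the choice to shed the most recently added domain are all assumptions; where the shed domain goes (another agent, a human queue) is left out.

```python
from collections import deque
from typing import Deque, List


class AdaptiveAgent:
    """Toy controller that sheds a domain when its recent success rate drops."""

    def __init__(self, domains: List[str], window: int = 10,
                 threshold: float = 0.8, min_domains: int = 1) -> None:
        self.domains = list(domains)
        self.offloaded: List[str] = []
        self.recent: Deque[bool] = deque(maxlen=window)  # rolling task outcomes
        self.threshold = threshold
        self.min_domains = min_domains

    def success_rate(self) -> float:
        return sum(self.recent) / len(self.recent) if self.recent else 1.0

    def record(self, success: bool) -> None:
        """Log one task outcome and shed a domain if the full window underperforms."""
        self.recent.append(success)
        window_full = len(self.recent) == self.recent.maxlen
        if (window_full and self.success_rate() < self.threshold
                and len(self.domains) > self.min_domains):
            # Hand the most recently added domain elsewhere (not modelled here).
            self.offloaded.append(self.domains.pop())
            self.recent.clear()  # measure the smaller workload afresh


agent = AdaptiveAgent(["support", "calendar", "hr"], window=4, threshold=0.75)
for outcome in [True, False, False, True]:
    agent.record(outcome)
print(agent.domains, agent.offloaded)  # ['support', 'calendar'] ['hr']
```

Waiting for a full window before acting avoids shedding work on the strength of one or two early failures.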

2. Intelligent Task Prioritization

AI models should be designed to identify and prioritize high-impact tasks, so that critical functions are not crowded out by trivial requests.

3. Hybrid AI-Human Collaboration

AI should work alongside humans, allowing for oversight in high-risk decisions such as legal compliance and financial management.
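A common way to implement this oversight is an approval gate: actions in high-risk categories run only after a human signs off. In this sketch the category names, the `run_action` executor, and the `deny_all` reviewer are hypothetical placeholders for a real executor and review workflow.

```python
from typing import Callable

# Hypothetical categories that always need a human sign-off.
HIGH_RISK = {"legal", "finance"}


def execute(action: str, category: str,
            run: Callable[[str], str],
            approve: Callable[[str], bool]) -> str:
    """Run low-risk actions directly; ask a human before running high-risk ones."""
    if category in HIGH_RISK and not approve(action):
        return f"blocked: {action!r} was not approved"
    return run(action)


def run_action(action: str) -> str:
    return f"done: {action}"


def deny_all(action: str) -> bool:
    # Stand-in for a real review step, e.g. a ticket or chat approval.
    return False


print(execute("send meeting reminder", "calendar", run_action, deny_all))
print(execute("wire $10,000 to vendor", "finance", run_action, deny_all))
```

Low-risk actions never reach the reviewer, so human attention is spent only where the stakes justify it.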

“The future of AI is not about replacing human intelligence but augmenting it. Multi-agent systems and human-AI collaboration will define the next phase of intelligent automation.”

The Path Forward for AI Development

The rapid evolution of AI presents both opportunities and challenges. While AI agents have revolutionized automation, LangChain’s findings highlight a crucial limitation: single-agent systems cannot scale indefinitely. As AI research progresses, organizations must explore multi-agent architectures to ensure reliability and efficiency.

For further insights into AI, Dr. Shahid Masood and the expert team at 1950.ai provide in-depth analysis on predictive AI, quantum computing, and advanced automation.
