Inside Huawei’s Atlas 960: How China’s SuperPoDs Aim to Outscale Nvidia’s AI Empire

- Dr. Shahid Masood

- Sep 19, 2025

- 5 min read

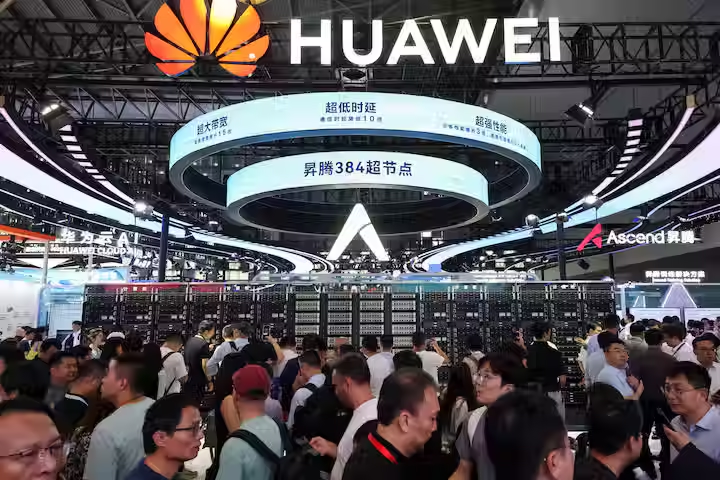

Huawei has unveiled what it calls the world’s most powerful SuperPoDs and SuperClusters, marking a decisive step in China’s effort to create self-sufficient AI infrastructure. At HUAWEI CONNECT 2025 in Shanghai, Rotating Chairman Eric Xu detailed the company’s newest high-performance computing platforms—the Atlas 950 and Atlas 960 SuperPoDs—and outlined long-term plans for proprietary Ascend AI chips, Kunpeng server chips, and high-bandwidth memory.

This article explores how Huawei’s strategy of tightly coupling cutting-edge interconnect technology with large-scale compute nodes positions it as a formidable rival to established players like Nvidia, while potentially reshaping the global AI ecosystem.

From SuperPoD to SuperCluster: Redefining Large-Scale Compute

Huawei describes a SuperPoD as a single logical machine built from multiple physical machines that learn, think, and reason as one. The newly launched Atlas 950 and Atlas 960 SuperPoDs scale this design to thousands of NPUs each:

| Product | NPUs (Neural Processing Units) | Launch Timeline | Key Differentiator |
| --- | --- | --- | --- |
| Atlas 950 SuperPoD | 8,192 Ascend NPUs | Available 2026 | Industry-leading interconnect bandwidth |
| Atlas 960 SuperPoD | 15,488 Ascend NPUs | Available 2027 | Double the compute of its predecessor |

Building on these SuperPoDs, Huawei has also introduced Atlas 950 SuperCluster with more than 500,000 Ascend NPUs and Atlas 960 SuperCluster with over one million NPUs, creating an interconnected fabric of compute resources.
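As a rough sanity check on these figures, the sketch below works out the minimum number of SuperPoDs each SuperCluster would need in order to exceed the quoted NPU counts. It assumes a SuperCluster is made up solely of whole SuperPoDs of the matching generation, which the announcement as summarized here does not spell out; the function name `min_superpods` is purely illustrative.

```python
# NPUs per SuperPoD, as quoted in the article.
NPUS_PER_SUPERPOD = {"Atlas 950": 8_192, "Atlas 960": 15_488}

# Lower bounds on SuperCluster size quoted in the article
# ("more than 500,000" and "over one million" NPUs).
CLUSTER_NPU_FLOOR = {"Atlas 950": 500_000, "Atlas 960": 1_000_000}


def min_superpods(npu_floor: int, npus_per_pod: int) -> int:
    """Smallest whole number of SuperPoDs whose combined NPUs strictly exceed npu_floor.

    Assumption (not from the source): a SuperCluster consists only of
    complete SuperPoDs of a single generation.
    """
    return npu_floor // npus_per_pod + 1


if __name__ == "__main__":
    for generation, per_pod in NPUS_PER_SUPERPOD.items():
        pods = min_superpods(CLUSTER_NPU_FLOOR[generation], per_pod)
        print(f"{generation} SuperCluster: at least {pods} SuperPoDs "
              f"({pods * per_pod:,} NPUs)")
```

Under that assumption, each SuperCluster would need somewhere in the low-to-mid sixties of SuperPoDs, which gives a sense of how much of the engineering burden falls on the interconnect between pods rather than within them.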

These clusters aim to outperform all existing market alternatives, providing abundant computing power for rapid advancements in AI training, simulation, and inference at national and industrial scales.

The Interconnect Bottleneck and UnifiedBus 2.0

Scaling up compute nodes requires equally advanced interconnect technology. Traditional copper and optical cables are limited in their ability to maintain high-speed, low-latency connections across massive chip arrays.

Huawei’s solution is UnifiedBus, a proprietary interconnect protocol that enables seamless, high-throughput communication across SuperPoDs. The company released UnifiedBus 2.0 specifications at HUAWEI CONNECT 2025, inviting industry partners to develop components around it and create an open ecosystem.

According to Huawei, UnifiedBus 2.0 integrates three decades of networking expertise with new systems innovations to address long-distance, high-volume data transport—a critical bottleneck for large-scale AI infrastructure.

“SuperPoDs and SuperClusters powered by UnifiedBus are our answer to surging demand for computing, both today and tomorrow,” said Eric Xu.

Ascend AI Chips: A Roadmap of Rapid Iteration

Huawei’s announcement also shed light on its chip strategy, breaking years of silence about its semiconductor efforts. The company plans to follow a one-year release cycle, doubling compute with each iteration:

- Ascend 910C launched in Q1 2025

- Ascend 950 scheduled for 2026 (two variants)

- Ascend 960 set for 2027

- Ascend 970 expected in 2028
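To make the doubling claim concrete, here is a minimal sketch of what a strict "double every generation" cadence would imply. It treats the Ascend 910C as a 1x baseline and assumes doubling applies at every step in the list above, including from the 910C to the 950; both are simplifying assumptions for illustration, not figures published by Huawei.

```python
# Roadmap entries (chip name, year) as listed in the article.
ROADMAP = [
    ("Ascend 910C", 2025),
    ("Ascend 950", 2026),
    ("Ascend 960", 2027),
    ("Ascend 970", 2028),
]


def relative_compute(roadmap):
    """Map each chip to its compute relative to the first entry.

    Assumption (illustrative only): compute exactly doubles with each
    successive generation, starting from a 1x baseline.
    """
    return {name: 2 ** step for step, (name, _year) in enumerate(roadmap)}


if __name__ == "__main__":
    for name, factor in relative_compute(ROADMAP).items():
        print(f"{name}: {factor}x baseline")
```

If the cadence holds, three generations compound to an eightfold gain over the 2025 baseline by 2028. Real per-chip gains will depend on precision format, memory bandwidth, and manufacturing process, none of which this idealized model captures.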

This roadmap signals not only technological ambition but also a commitment to predictable performance improvements for clients building on Huawei’s ecosystem.

High-Bandwidth Memory: Achieving Vertical Integration

Huawei confirmed it has developed its own proprietary high-bandwidth memory (HBM), a technology previously dominated by South Korea’s SK Hynix and Samsung Electronics. By integrating HBM into its chips, Huawei reduces reliance on external suppliers and optimizes the performance of Ascend processors under the high data-transfer demands of large AI models.

Industry observers note that this capability, combined with UnifiedBus interconnects, provides Huawei with end-to-end control over the data pipeline, a crucial differentiator for workloads like natural language processing, autonomous driving simulations, and large-scale recommendation systems.

The Rise of Supernodes: Building Blocks of Next-Gen AI Systems

Huawei is also introducing “supernodes”, a new class of compute racks designed for high-speed interconnection of chips. Each node houses thousands of NPUs and can be grouped into clusters or SuperPoDs.

The Atlas 950 and Atlas 960 are direct successors to the Atlas 900 (CloudMatrix 384), which used 384 Ascend 910C chips. The leap from 384 NPUs to 8,192 and then 15,488 NPUs per system demonstrates Huawei’s aggressive scaling strategy.

“Huawei is leveraging its strengths in networking, along with China’s advantages in power supply, to aggressively push supernodes and offset lagging chip manufacturing,” observed Wang Shen, data center infrastructure practice lead at Omdia.

Kunpeng Server Chips: Broadening the Compute Portfolio

Beyond AI accelerators, Huawei is also refreshing its Kunpeng server chips. New versions are planned for 2026 and 2028, further diversifying Huawei’s compute portfolio. By combining general-purpose compute with specialized AI hardware, Huawei positions itself as a one-stop provider for large-scale enterprise and government infrastructure.

Geopolitical Context: A Quietly Escalating Tech Rivalry

Huawei’s announcements come amid rising U.S.–China technology tensions. Chinese authorities have reportedly ordered top firms to halt purchases of Nvidia AI chips and cancel existing orders. In parallel, Washington maintains export controls on advanced chipmaking tools, limiting Huawei’s access to U.S. technologies.

Yet analysts argue that Huawei’s public show of strength signals growing confidence:

“Domestic advanced chip manufacturing capacity is no longer such a big constraint for marketing the product, and U.S. export controls are not really threatening this process anymore,” said Tilly Zhang, analyst at Gavekal Dragonomics.

This timing also coincides with a high-profile meeting between U.S. President Donald Trump and Chinese President Xi Jinping, underscoring how technology announcements can influence diplomatic posturing.

Comparative Performance: Huawei vs. Nvidia

Despite Huawei’s advances, insiders at Chinese tech firms acknowledge that Nvidia’s chips still outperform in many workloads. However, Huawei’s integrated approach—combining chips, memory, interconnect, and power infrastructure—could narrow the gap and offer a compelling domestic alternative for China-based enterprises.

The key differentiators include:

- Vertical Integration: From chips to interconnect protocols to memory.

- Scale: SuperPoDs with up to 15,488 NPUs, SuperClusters with over one million NPUs.

- Roadmap Certainty: One-year cycles with doubling compute power.

- Geopolitical Safety: Reduced dependence on U.S.-restricted technologies.

Applications Across Industries

Huawei’s SuperPoDs and SuperClusters are positioned not just as research platforms but as industrial engines. Potential applications include:

- Training trillion-parameter AI models for natural language processing.

- High-fidelity digital twins for manufacturing, energy, and smart cities.

- Large-scale genomic analysis for precision medicine.

- Real-time analytics for financial markets and national security.

These use cases highlight how massive compute infrastructure can drive innovation beyond traditional tech sectors.

Strategic Ecosystem Development

The company is encouraging partners to adopt UnifiedBus 2.0 and build compatible products. This mirrors the ecosystem strategies of successful platform companies: create a foundational technology, release specifications, and foster third-party innovation.

On the general-purpose side, Huawei says its distributed database GaussDB, combined with the new TaiShan 950 SuperPoD, offers a mainframe-class alternative for enterprises, potentially displacing legacy systems such as mid-range computers and Oracle’s Exadata servers.

Future Outlook: Sustainable Compute for AI’s Next Phase

As AI models grow exponentially in size and complexity, the bottleneck is no longer algorithms but compute and data movement. Huawei’s integrated approach of SuperPoDs, proprietary chips, high-bandwidth memory, and UnifiedBus interconnect could set a new industry standard for how large-scale AI systems are built and operated.

If the company delivers on its one-year release cycle, doubling compute power with each iteration, it could shape a future where AI infrastructure is as strategic as oil or rare earth minerals.

A Strategic Shift in AI Infrastructure

Huawei’s unveiling of the world’s most powerful SuperPoDs and SuperClusters marks more than a product launch—it signals a reconfiguration of the global AI computing landscape. By tightly coupling proprietary chips, interconnects, and memory, Huawei is challenging Nvidia’s dominance and advancing China’s self-reliance in critical technologies.

For industry observers, investors, and policymakers, these developments warrant close attention. They represent not just a technical milestone but a potential shift in the balance of power within the AI ecosystem.

Read more expert insights from Dr. Shahid Masood and the specialist team at 1950.ai, who provide cutting-edge analysis on AI, cybersecurity, and emerging technologies shaping the future of global industries.
