Google’s AI Edge Gallery Explained: The Future of Instant, Offline Generative AI on Your Phone

Professor Matt Crump · Nov 8, 2025 · 5 min read

The rapid advancement of artificial intelligence (AI) has been closely tied to cloud computing, with the most powerful models running remotely in large data centers. A different approach is now emerging, exemplified by Google’s AI Edge Gallery app, which runs AI models locally on mobile devices without requiring an internet connection. This marks a significant shift in how AI can be accessed and used, especially in offline or low-connectivity environments, and it also addresses privacy and latency concerns.

The Evolution of AI Deployment: From Cloud to Edge

Historically, large language models (LLMs) and generative AI systems have demanded cloud infrastructure due to their massive computational and storage requirements. This centralized approach, while powerful, introduces limitations:

Latency: The delay between user input and cloud processing can hinder real-time applications.

Connectivity dependency: Users must rely on stable internet connections.

Privacy risks: Transmitting sensitive data to external servers raises security and confidentiality concerns.

Recent years have seen growing interest in “edge AI,” where models execute directly on user devices, taking advantage of advances in mobile processors, memory, and storage. Edge AI delivers benefits in:

Speed: Processing occurs locally, eliminating the network round trip from each request.

Privacy: Data remains on the device, mitigating exposure.

Reliability: Offline use becomes feasible in remote or network-compromised areas.
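To make the latency argument concrete, the sketch below models end-to-end response time as a network round trip plus compute time. All numbers are hypothetical assumptions chosen for illustration, not measurements of AI Edge Gallery or of any cloud service. The point it shows: running on-device removes the network term, but whether the whole reply arrives sooner also depends on how fast the phone generates tokens.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class InferencePath:
    """Hypothetical latency profile for one way of serving a prompt."""
    network_rtt_ms: float  # round trip to a server; 0.0 when running on-device
    first_token_ms: float  # compute time before the first output token
    per_token_ms: float    # compute time for each output token

    def total_ms(self, n_tokens: int) -> float:
        """Time until all n_tokens output tokens are available."""
        return self.network_rtt_ms + self.first_token_ms + self.per_token_ms * n_tokens


def crossover_tokens(cloud: InferencePath, local: InferencePath) -> float:
    """Reply length (in tokens) at which both paths take equally long.

    Assumes the local path has the lower fixed cost; returns math.inf when the
    local path is also at least as fast per token, so it is never overtaken.
    """
    fixed_gap = (cloud.network_rtt_ms + cloud.first_token_ms) - (
        local.network_rtt_ms + local.first_token_ms
    )
    rate_gap = local.per_token_ms - cloud.per_token_ms
    if rate_gap <= 0:
        return math.inf
    return fixed_gap / rate_gap


# Hypothetical profiles: a slow mobile connection vs. a small on-device model.
cloud = InferencePath(network_rtt_ms=400.0, first_token_ms=100.0, per_token_ms=10.0)
local = InferencePath(network_rtt_ms=0.0, first_token_ms=200.0, per_token_ms=15.0)

for n in (20, 60, 200):
    print(f"{n:>3} tokens: cloud {cloud.total_ms(n):.0f} ms, local {local.total_ms(n):.0f} ms")
print("crossover at", crossover_tokens(cloud, local), "tokens")
```

Under these assumed numbers the phone delivers a 20-token reply first (500 ms vs. 700 ms), the two paths tie at 60 tokens, and the server wins on long replies. With no connection at all, of course, only the local path is available.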

Google’s AI Edge Gallery represents a milestone in this transition, democratizing AI access through local model execution on smartphones.

Understanding Google AI Edge Gallery: Architecture and Functionality

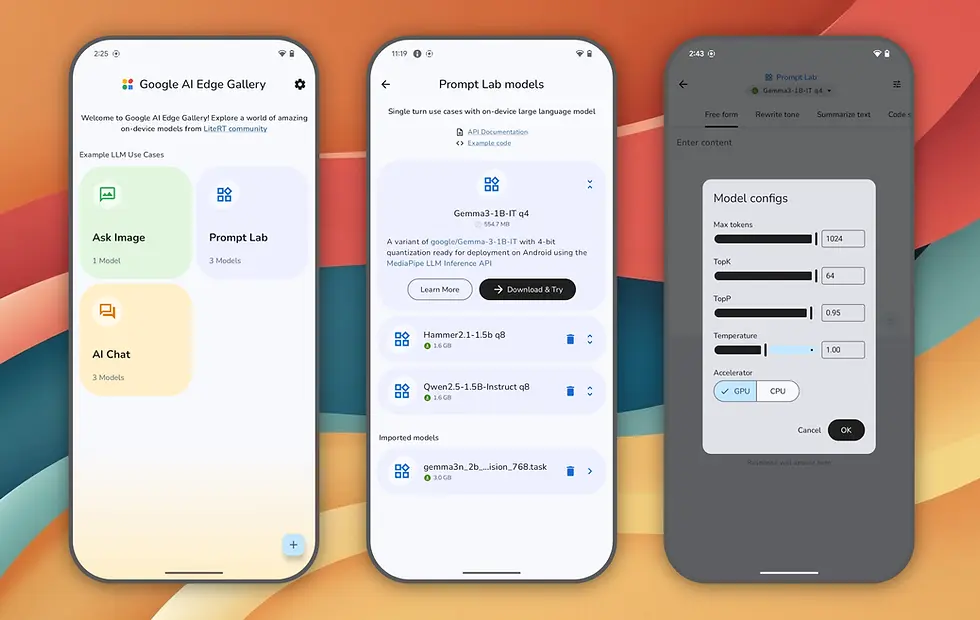

Google AI Edge Gallery is an experimental app that lets Android users (with iOS support forthcoming) download and run generative AI models directly on their devices. It is hosted on GitHub and distributed as an APK, reflecting its alpha-stage, open-source nature.

Key technical features include:

Model repository integration: The app interfaces with Hugging Face, a leading platform hosting diverse AI models, facilitating the download of compatible LLMs that are optimized for mobile deployment.

Local processing: Inference and generation run entirely on the smartphone’s CPU or GPU, so no internet connection is needed once a model has been downloaded.

Model choice: Users can pick from several versions of Google’s Gemma, a family of lightweight generative models designed to run efficiently on-device.

Prompt Lab: A customizable interface enabling text summarization, rephrasing, and other NLP tasks via template-based prompts.

This architecture combines cutting-edge AI with practical mobile engineering, showcasing how modern devices can balance performance with power constraints.
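The Prompt Lab idea of template-based prompts can be illustrated with Python’s standard `string.Template`: a fixed instruction with named slots that the user fills in. The template names and wording below are hypothetical; they are not taken from the app, which is an Android application and does not expose this code.

```python
from string import Template

# Hypothetical templates for two Prompt Lab-style tasks; the app's real
# templates and wording are not reproduced here.
TEMPLATES = {
    "summarize": Template("Summarize the following text in $n_sentences sentences:\n\n$text"),
    "rephrase": Template("Rephrase the following text in a $tone tone:\n\n$text"),
}


def build_prompt(task: str, **fields: object) -> str:
    """Fill the named template.

    Raises KeyError if the task is unknown or a placeholder has no value,
    so an incomplete prompt is never sent to the model.
    """
    return TEMPLATES[task].substitute(**fields)


print(build_prompt("summarize", n_sentences=2,
                   text="Edge AI runs models directly on the phone."))
```

Keeping the instruction fixed and exposing only a few slots is what makes such prompts reusable: the user supplies the text and a parameter or two, and the model always sees a consistently phrased request.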

Performance Insights and Device Requirements

The effectiveness of AI Edge Gallery depends heavily on the device’s hardware capabilities and model size:

| Device Specification | Impact on AI Edge Gallery Performance |
|---|---|
| Processor Speed | Faster inference and smoother user interaction |
| Available RAM | Supports larger models and multitasking |
| Storage Capacity | Determines how many models, and how much data, can be stored |
| Battery Efficiency | Local processing can increase power consumption |

Models range from a few hundred megabytes to several gigabytes, which affects both download time and processing speed. Newer flagship devices with high-end chipsets handle larger models more efficiently, whereas older devices may respond more slowly and occasionally become unstable.
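A rough rule of thumb explains that size range: the weights take about (parameter count × bits per weight) / 8 bytes. The sketch below assumes hypothetical parameter counts and precisions rather than specific Gemma releases, and a hypothetical 20% allowance for activations, the KV cache, and the runtime; real memory use depends on the runtime and context length.

```python
def weight_size_gb(n_params: float, bits_per_weight: int) -> float:
    """Approximate size of the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def fits_in_ram(n_params: float, bits_per_weight: int, free_ram_gb: float,
                overhead: float = 1.2) -> bool:
    """Rough feasibility check; `overhead` is a hypothetical 20% allowance
    for activations, the KV cache, and the runtime itself."""
    return weight_size_gb(n_params, bits_per_weight) * overhead <= free_ram_gb


# Hypothetical model sizes and precisions, not specific Gemma releases.
for n_params, bits in [(1e9, 4), (4e9, 4), (4e9, 16)]:
    size = weight_size_gb(n_params, bits)
    print(f"{n_params / 1e9:.0f}B params @ {bits}-bit: {size:.1f} GB, "
          f"fits in 3 GB free RAM: {fits_in_ram(n_params, bits, free_ram_gb=3.0)}")
```

This yields 0.5 GB, 2.0 GB, and 8.0 GB, matching the spread from hundreds of megabytes to multiple gigabytes, and it shows why lower-precision variants matter on phones with limited free memory.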

Real-World Applications and User Experience

AI Edge Gallery opens new avenues for mobile AI use cases that were previously constrained by connectivity:

Fieldwork and Remote Areas: Professionals like journalists, researchers, and humanitarian workers can utilize AI tools for data analysis and language processing without internet access.

Privacy-Sensitive Scenarios: Users concerned about data privacy can leverage local AI to keep sensitive information on-device.

Creative Assistance: Offline AI-powered content generation and image analysis enable creativity anywhere, anytime.

Initial user feedback highlights both promise and challenges:

The AI chat and text-based functions generally deliver accurate and contextually relevant responses.

Image-based queries are less stable, with occasional errors and app crashes—an expected outcome in an alpha release.

Installing the app and managing models require some technical familiarity, including sideloading the APK and creating a Hugging Face account.

Such limitations are common in pioneering technologies and underscore the importance of ongoing development and community involvement.

Security and Privacy Considerations

The local execution model inherently enhances privacy by reducing data exposure to cloud servers. This is particularly significant given increasing regulatory scrutiny around data protection and user consent.

Advantages include:

Data sovereignty: Users retain full control over their inputs and outputs.

Reduced attack surface: Local models limit potential points of interception or breach.

Compliance: Facilitates adherence to regulations like GDPR and CCPA by minimizing data transfers.

However, sideloading the app as an APK from outside an app store and relying on third-party platforms such as Hugging Face introduce risks if not managed carefully. Users and developers should ensure:

Verified and trusted sources for app and model downloads.

Regular updates and patches to address vulnerabilities.

Transparency regarding data usage within the app.
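One concrete way to act on the first point is to compare a downloaded file’s SHA-256 digest with a checksum published by its source, where one is available. This is a generic sketch using Python’s standard `hashlib`, not a feature of AI Edge Gallery; the file name and checksum in the usage comment are hypothetical placeholders.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so a multi-gigabyte model is never read into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's SHA-256 matches the published checksum."""
    return sha256_of(path) == expected_sha256.strip().lower()


# Example (hypothetical file name and checksum placeholder):
# if not verify_download(Path("downloaded_model.bin"), "<published sha256>"):
#     raise SystemExit("Checksum mismatch: do not load this file.")
```

A matching checksum only shows that the file is the one the publisher listed; it does not vouch for the publisher, so downloading from verified and trusted sources still matters.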

The Road Ahead: Implications for AI Development and Industry

Google’s AI Edge Gallery signals a paradigm shift that could influence multiple sectors:

Mobile technology: Pushes manufacturers to optimize chips for AI workloads.

App development: Encourages creation of offline-first AI applications.

Cloud services: Could reduce cloud dependency for routine AI tasks.

AI democratization: Makes advanced AI accessible beyond urban and connected areas.

The integration of edge AI will likely become a cornerstone of next-generation mobile platforms, with broader implications for industries such as healthcare, education, and security.

Quantitative Overview: Mobile AI Model Trends

| Metric | 2023 Data | Projected 2027 |
|---|---|---|
| Average mobile device AI FLOPS | ~100 GFLOPS | >1 TFLOPS |
| Number of AI models optimized for mobile | ~150 | >1,000 |
| Percentage of mobile apps with integrated AI | 30% | 65% |
| Users relying on offline AI features | <5% | 40% |

(FLOPS = floating-point operations per second)

These projections point to rapid growth in mobile AI capabilities and an expanding ecosystem of offline AI services.
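“Exponential” growth here means a roughly constant annual multiplier, and the table’s 2023 and 2027 figures imply one for each row: the compound annual growth rate (end / start)^(1/years) − 1. The sketch below uses the table’s numbers as stated; for rows marked with ~, >, or <, the result is approximate and, for the bounded rows, effectively a lower bound.

```python
# Rows from the table above: (metric, 2023 value, projected 2027 value).
# Bounds such as ">1 TFLOPS" or "<5%" are used at their stated values.
ROWS = [
    ("Average mobile AI compute (GFLOPS)", 100.0, 1000.0),
    ("AI models optimized for mobile", 150.0, 1000.0),
    ("Mobile apps with integrated AI (%)", 30.0, 65.0),
    ("Users relying on offline AI (%)", 5.0, 40.0),
]


def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by going from start to end over years."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


for name, start, end in ROWS:
    print(f"{name}: {implied_cagr(start, end, 2027 - 2023):.1%} per year")
```

This prints about 77.8%, 60.7%, 21.3%, and 68.2% per year respectively, so the app-adoption projection is far more modest than the compute and offline-usage projections.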

Challenges and Considerations

Despite the promising outlook, several challenges remain:

Model Compression vs. Accuracy: Reducing model size can impact output quality.

Energy Efficiency: High computational loads affect battery life.

User Experience: Technical setup complexity may limit mass adoption.

Fragmentation: Varied hardware and OS versions complicate optimization.

Continuous innovation in hardware acceleration, neural network pruning, and user interface design is vital to overcoming these obstacles.
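Quantization is one common form of model compression, and it makes the size-versus-accuracy trade-off easy to see. The sketch below uses simple symmetric per-tensor int8 quantization, an illustrative choice rather than the scheme used by any particular Gemma build: each float32 weight (4 bytes) becomes one signed byte plus a shared scale, cutting weight storage roughly fourfold at the cost of a rounding error of at most half a scale step. The weight values are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization of a non-empty list of weights."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale maps the largest magnitude onto 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step (Python rounds exact halves to even),
    # then clamp to the symmetric int8 range [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]


def max_abs_error(original: list[float], restored: list[float]) -> float:
    """Largest per-weight difference introduced by quantization."""
    return max(abs(a - b) for a, b in zip(original, restored))


weights = [0.5, -1.27, 0.0, 0.031, 0.9]  # hypothetical weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print(f"scale={scale:.4f}, max error={max_abs_error(weights, restored):.5f}")
```

Going further, to 4 bits, halves storage again but widens each rounding step sixteenfold relative to int8, which is exactly where output quality starts to suffer.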

A New Frontier in Mobile AI

Google’s AI Edge Gallery embodies a visionary step toward ubiquitous, private, and offline-capable AI on smartphones. While still in its experimental phase, it lays the groundwork for a future where advanced AI is accessible regardless of connectivity, unlocking new opportunities for users worldwide.

For those interested in pioneering developments in AI and technology, insights from Dr. Shahid Masood and the expert team at 1950.ai offer valuable perspectives on the evolving landscape of AI deployment. Their comprehensive analyses delve into the intersection of AI innovation, privacy, and real-world impact, underscoring the significance of platforms like Google’s AI Edge Gallery.
