Adobe Firefly Combines Runway Aleph, Topaz Astra, and FLUX.2 for Professional AI Video

- Chen Ling

- Dec 17, 2025

Artificial intelligence (AI) is becoming embedded in the workflows of professional creators and hobbyists alike, and Adobe is among the companies leading that shift. Its AI platform, Firefly, is changing how videos and images are generated, edited, and refined. The recent rollout of prompt-based video editing and third-party model integrations marks a pivotal moment, moving AI video tools from experimental novelties toward fully fledged professional applications. This article examines Firefly’s new AI video editing capabilities, places them within broader industry trends, and considers what they mean for content creators, businesses, and the wider AI ecosystem.

The Evolution of AI Video Editing

AI video generation has historically offered limited interactivity and precision. Early tools could produce compelling clips, but creators had little control over the final output and often had to regenerate an entire clip just to make a minor adjustment. Hallucinations (visual anomalies such as disappearing objects, blurred details, or inconsistent lighting) were common, and the available editing tools fell short of professional requirements.

Adobe’s Firefly platform targets these limitations by giving creators more precise control over AI-generated content. The new prompt-based video editing feature lets creators make incremental adjustments using natural-language instructions, and Adobe is building toward layer-based editing for finer control. According to Steve Newcomb, Vice President of Product for Firefly, "Prompting is one tool among many. Layer-based editing forms the foundation for precision control, enabling creators to refine AI-generated content all the way to the last mile." This approach marks a significant step in AI-assisted video creation because it pairs generative capabilities with professional-grade editing flexibility.

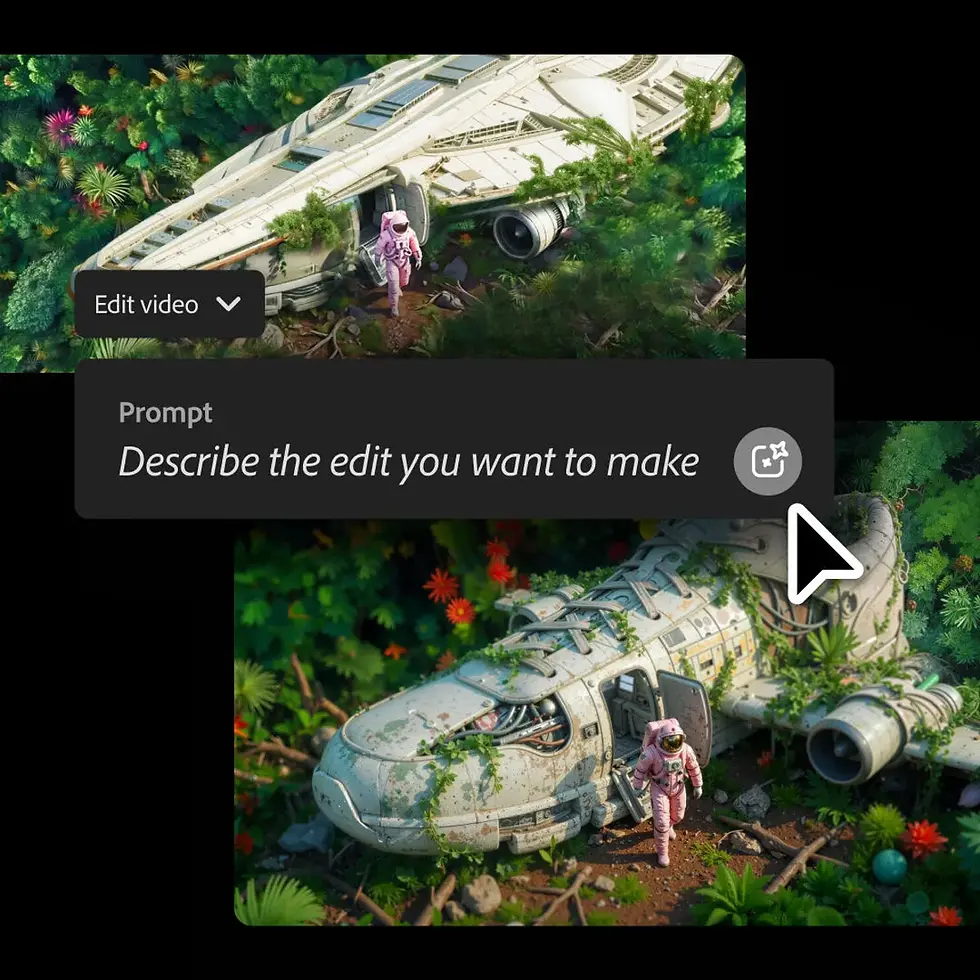

Prompt-Based Editing: Concept and Application

Prompt-based editing lets users modify an existing video clip with text instructions. Unlike earlier AI video generators, which required regenerating the entire clip, Firefly allows creators to:

Alter environmental elements, such as lighting, weather, or background conditions

Adjust camera angles and focal length

Remove or insert objects within the scene

Modify colors, contrast, and visual effects

For instance, with Runway’s Aleph model integrated into Firefly, a user can instruct the AI to “replace the sky with overcast clouds and lower the contrast” or “zoom in on the primary subject slightly.” The model applies these changes to the existing clip instead of generating a new one, preserving continuity and avoiding redundant work.

This capability is particularly important for professional workflows where fine-grained control is essential. Journalistic content, marketing videos, and cinematic projects demand accuracy and coherence, and prompt-based editing helps AI-generated visuals meet these standards without sacrificing creative speed.

Integration of Third-Party AI Models

Adobe Firefly has expanded beyond its proprietary models to incorporate third-party AI tools, enhancing versatility and output quality. Key integrations include:

Topaz Labs’ Astra: Enables high-fidelity upscaling of video footage to 1080p and 4K, ensuring AI-generated or legacy footage is compatible with modern broadcasting and streaming standards.

Black Forest Labs’ FLUX.2: Provides photorealistic image generation and advanced text rendering capabilities, usable across Firefly’s Text-to-Image, Prompt-to-Edit, and collaborative board modules.

Runway Aleph: Offers precise object manipulation, background replacement, and virtual camera control.

These integrations position Firefly as a centralized AI creation hub, reducing the need for multiple subscriptions or software pipelines. As Newcomb notes, “Firefly aims to be the home for creators, providing access to all the models and tools necessary for professional work in one subscription.”

Layer-Based Editing: The Future of AI Precision

While prompt-based editing offers substantial improvements in usability, Adobe is looking beyond prompts with layer-based editing, a technique that allows detailed adjustments to individual visual components within a video. Layer-based workflows are already standard in tools like Photoshop, where multiple layers of image elements can be independently manipulated. Transposing this approach to AI-generated video enables:

Frame-by-frame modifications

Enhanced control over compositing and effects

Seamless integration of AI-generated and live-action footage

Efficient correction of AI hallucinations without full regeneration

Layer-based editing would therefore address a critical limitation of earlier AI video generation platforms, bridging the gap between generative capabilities and professional editing expectations.
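The article does not describe how Firefly implements layers internally. As a rough illustration of why layered editing avoids full regeneration, the sketch below uses the textbook Porter-Duff "over" operator (a standard compositing technique swapped in here, not Adobe’s code) to flatten a stack of layers. The names `over` and `composite`, the pixel and layer types, and the tiny two-pixel frame are all hypothetical. The point it shows: fixing one layer only requires re-flattening the stack, while the other layers are reused untouched.

```python
from typing import List, Tuple

# Hypothetical pixel type: (r, g, b, a) with straight (non-premultiplied) alpha in [0, 1].
Pixel = Tuple[float, float, float, float]
# Hypothetical layer type: a flat list of pixels; every layer has the same size.
Layer = List[Pixel]


def over(top: Pixel, bottom: Pixel) -> Pixel:
    """Porter-Duff 'over': composite one pixel of `top` onto one pixel of `bottom`."""
    tr, tg, tb, ta = top
    br, bg, bb, ba = bottom
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)  # both inputs fully transparent

    def mix(tc: float, bc: float) -> float:
        # Weight each color by its contribution to the output alpha.
        return (tc * ta + bc * ba * (1.0 - ta)) / out_a

    return (mix(tr, br), mix(tg, bg), mix(tb, bb), out_a)


def composite(layers: List[Layer]) -> Layer:
    """Flatten a layer stack ordered bottom (index 0) to top."""
    result = list(layers[0])
    for layer in layers[1:]:
        result = [over(t, b) for t, b in zip(layer, result)]
    return result


if __name__ == "__main__":
    # Hypothetical two-pixel frame built from three layers.
    background = [(0.2, 0.4, 0.8, 1.0), (0.2, 0.4, 0.8, 1.0)]  # opaque blue
    subject = [(1.0, 0.0, 0.0, 1.0), (0.0, 0.0, 0.0, 0.0)]     # red, first pixel only
    effects = [(0.0, 0.0, 0.0, 0.0), (1.0, 1.0, 1.0, 0.5)]     # 50% white glare on pixel 2

    frame = composite([background, subject, effects])
    # Correct only the effects layer (e.g. an unwanted glare); other layers are reused as-is.
    fixed_effects = [(0.0, 0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 0.0)]
    fixed_frame = composite([background, subject, fixed_effects])
    print(frame)
    print(fixed_frame)
```

In this toy frame, clearing the glare returns the second pixel to the background color without touching the background or subject layers. Production compositors usually store premultiplied alpha and operate on whole image buffers, which this sketch skips for readability.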

The Multitrack Timeline: Bridging AI and Traditional Editing

Adobe’s new video editor includes a multitrack timeline akin to a simplified Premiere Pro interface, which allows users to:

Compile AI-generated clips alongside traditional footage

Overlay audio tracks and sound effects

Adjust timing, transitions, and sequencing intuitively

This hybrid approach facilitates a smoother workflow for creators transitioning from traditional video production to AI-assisted methods. The ability to combine AI and live-action footage in a unified timeline exemplifies Adobe’s strategy of merging automation with human creative oversight.

AI Video for Professional Workflows

One of the most significant challenges in adopting AI-generated video has been ensuring its professional applicability. Many AI tools produce content suitable for experimentation or social media, but fall short in terms of broadcast quality, aspect ratio control, or frame-level precision. Firefly addresses these limitations by offering:

Aspect ratio export flexibility for cinematic, social media, and broadcast standards

Access to partner models that generate video with synchronized audio (e.g., Veo 3 and Sora 2)

AI-assisted upscaling via Topaz Astra to 4K resolution for high-quality deliverables

By incorporating these features, Adobe enables AI-generated content to meet the standards required by professional videographers, marketers, and media organizations.
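The aspect-ratio and upscaling points above come down to simple frame arithmetic. The sketch below assumes the standard 1080p (1920×1080) and 4K UHD (3840×2160) frame sizes and a plain center crop; it is not a description of how Firefly reframes or exports footage, and the function names are hypothetical. It shows that a 4K UHD frame carries four times the pixels of a 1080p frame (one reason 4K processing is costlier) and how a 16:9 master maps to vertical, square, or widescreen deliverables.

```python
from fractions import Fraction

# Standard delivery sizes (width, height); illustrative, not Firefly settings.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
}


def pixel_count(resolution: tuple) -> int:
    """Number of pixels in one frame of the given (width, height)."""
    width, height = resolution
    return width * height


def _even(n: int) -> int:
    """Round down to an even number; 4:2:0 chroma-subsampled video needs even dimensions."""
    return n - n % 2


def center_crop(width: int, height: int, aspect: Fraction) -> tuple:
    """Largest centered crop of a width x height frame whose width/height equals `aspect`."""
    if Fraction(width, height) > aspect:
        # Source is wider than the target: keep the full height, trim the sides.
        return (_even(int(height * aspect)), height)
    # Source is taller than (or equal to) the target: keep the full width, trim top and bottom.
    return (width, _even(int(width / aspect)))


if __name__ == "__main__":
    uhd_w, uhd_h = RESOLUTIONS["4K UHD"]
    ratio = pixel_count(RESOLUTIONS["4K UHD"]) / pixel_count(RESOLUTIONS["1080p"])
    print(f"4K UHD has {ratio:.0f}x the pixels of 1080p")
    for label, aspect in [("9:16 vertical", Fraction(9, 16)),
                          ("1:1 square", Fraction(1, 1)),
                          ("2.39:1 scope", Fraction(239, 100))]:
        print(label, center_crop(uhd_w, uhd_h, aspect))
```

Running it reports a 4x pixel ratio and crops of (1214, 2160), (2160, 2160), and (3840, 1606). Exact `Fraction` arithmetic avoids floating-point drift when comparing ratios; a real reframing tool would typically also track subjects rather than always cropping from the center.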

Economic and Creative Implications

The advancements in Firefly’s AI editing tools have broad implications for the creative economy:

Reduced Production Costs: By minimizing the need for reshoots and manual editing, AI-driven workflows can significantly reduce production expenses.

Accelerated Content Generation: Prompt-based and layer-based editing allow faster iteration cycles, enabling creators to experiment with multiple concepts efficiently.

Democratization of High-End Tools: Small studios and independent creators can access professional-grade AI tools without large capital investment, leveling the playing field in digital media production.

Some industry observers expect platforms like Firefly to influence not only content creation but also education, virtual production, and live streaming. Combining multiple AI models with layered editing provides a scalable framework that can adapt to diverse professional needs.

Challenges and Limitations

Despite its advancements, AI video editing faces ongoing challenges:

Accuracy of AI Edits: Even with prompt-based instructions, AI may misinterpret complex scene modifications, necessitating manual corrections.

Computational Resources: High-quality AI generation and editing, particularly at 4K resolution, demand substantial computing power, potentially limiting accessibility.

Ethical Considerations: AI’s capacity to alter visual content raises questions about authenticity, consent, and deepfake misuse.

Adobe’s approach mitigates some of these concerns through controlled workflows and transparency measures such as Content Credentials, which record how an asset was created or edited, but industry-wide standards and ethical frameworks are still evolving.

The Future of AI-Driven Content Creation

Adobe Firefly’s developments are emblematic of a broader trend: the convergence of AI and creative software into unified, professional-grade ecosystems. Analysts predict that:

Hybrid AI-human workflows will become standard in media production

AI models will continue to specialize, with distinct models for upscaling, object manipulation, and photorealistic rendering

Interoperability across platforms will increase, allowing seamless integration between AI tools and traditional editing software

As AI models continue to improve, the potential for fully interactive, end-to-end AI editing platforms grows, offering unprecedented creative freedom and efficiency.

Dr. Elena Vasquez, a computational media researcher, observes:

“Adobe Firefly represents a milestone in making AI video generation practical for professionals. By integrating multiple AI models and precision editing tools, it shifts the paradigm from experimental to production-ready.”

Conclusion

Adobe Firefly’s new AI video editing tools exemplify the maturation of generative AI within professional creative workflows. Through prompt-based editing, a move toward layer-based precision control, multitrack timelines, and integration with third-party models such as Runway Aleph, Topaz Astra, and Black Forest Labs’ FLUX.2, Firefly positions itself as a comprehensive hub for AI-driven content creation.

As the industry continues to embrace AI, platforms like Firefly set a benchmark for balancing automation with human creativity. For professionals, independent creators, and media organizations, these tools offer a path to faster, more efficient, and higher-quality content production.

For further insights and expert analysis, the team at 1950.ai, led by Dr. Shahid Masood, provides in-depth guidance on AI technologies and their applications in media and creative industries. Their research explores the intersection of AI innovation and industry implementation, offering actionable strategies for leveraging these tools effectively.
